I've got some vibsplaining to do...

A brief history of vibe *ing with AI, and a primer on what it means for work, life and the future

It feels like years ago now, but back in February, lesser-known OpenAI co-founder Andrej Karpathy coined the term "vibe coding" with his post on X, describing it as coding where you "fully give in to the vibes, embrace exponentials, and forget that the code even exists." The term caught fire and suddenly everyone was vibe coding, vibe writing, vibe thinking.

Developers actually started coding with the help of LLMs back in 2021, when GitHub released Copilot as a developer plugin for code-writing tools like Microsoft's Visual Studio Code (VS Code). The plugin was an instant hit, not because it wrote code from prompts, but because it anticipated the code you were writing and could complete it for you, saving you time. Programmers have long been aware that reinventing the wheel is a mandatory, recursive part of coding. But it's often the process of rewriting code that gives you comprehensive understanding, and the ability to fix things when they break. So code completion feels like magic because it looks at the context of the code, recognizes the start of a pattern, and anticipates the rest with a spooky level of accuracy.

I quickly took to Copilot, as did a vast number of developers. It was a massive productivity boost, with some claiming a 2x increase or more in coding speed. But Copilot was a different animal than the AI "vibe coding" tools we see today. Copilot was largely trained on GitHub's vast trove of open source and public code, then purpose-built as a pattern-completion recommender. These days, vibe coding tools are powered by the big foundation models, which are general-purpose large language models optimized for prompt-driven output. Code generation is a small corner of their capability, which may explain some of their unpredictability.

From the moment Copilot launched, there was immediate pushback from companies adopting this new technology. This is because the suggested auto-complete code was often proprietary, and there's a long history of source code intellectual property battles — code writing is considered a form of creative expression, similar to composing music or writing a book. Many of the code repositories used to train these models have commercially restrictive or undisclosed licenses, making their use in commercial applications problematic.

The technology powering Copilot in 2021, OpenAI's Codex, was also demonstrating early prompt-driven code writing. In a demo from 2021, two of OpenAI's founders show game logic written in JavaScript from a sequence of prompts. Anthropic promoted code generation in their initial Claude release in 2023. Google's Gemini and other foundation models followed suit, promoting their own code-generating capabilities. As an early user of these features, I found the results to be a mixed bag, but fun and interesting regardless.

This is all to say that by the time the term "vibe coding" appeared, LLMs were already seeing growing use for generating code and, in some cases, entire projects. But the term quickly reverberated across the influencer world as the unlock for non-technical folks to "vibe" fully functional apps with just a few prompts. The idea caught fire and drove mass adoption of apps like Cursor, Lovable, v0, and many other no/low-code development solutions. Lovable hit $100m in revenue in 8 months, the fastest growth of any company in history.

What's incredible about startups like Lovable and Cursor is that they are "wrapper" services: they build on foundation models, which provide the bulk of the service’s "magic," and add application-specific features like VS Code integration. The foundation models do the heavy lifting, but these companies nailed the product-market fit at exactly the right moment. Their ability to retain users will be their biggest test. When Anthropic launched Claude Code, I cancelled my Cursor subscription the next day.

Now that "vibe coding" has been a thing for 6 months, I feel a little retrospective is in order. Users have spent an estimated $250m on vibe coding startup platforms like Cursor and Lovable. Anthropic's paid "Max" accounts, plus token use overage fees, now account for close to $1b ARR, which can largely be attributed to coding use. In other words, people are spending a lot on vibe coding. Seasoned developers are now claiming efficiency gains of around 15% to 60% from AI-assisted development, which feels about right from my experience. For non-developers pouring money into no-code solutions, there's little data I can find, though I'm constantly hearing about frustrations from friends and colleagues who are trying.

There are a couple of key challenges with vibe coding. First is a pesky complexity problem I wrote about recently that seems to stop forward progress at around 80% completion. Without technical expertise, it can be very difficult to overcome a vibe-coded meltdown. Even with decent coding skills, recovering often means learning the entire code base, which wipes out a large portion of the efficiency gain. The other problem is what I'd call the confidence illusion, which is the complete lack of pushback from your AI helper as you find yourself tackling problems far above your skill level. I've never once had Claude Code say "are you sure you want to do it that way?", although I really wish it would.

This brings us to the bigger challenges, the existential ones: threats to future models, the role of human innovation and cognition, and the societal and environmental cost of AI (don’t worry, it’s not all doom and gloom).

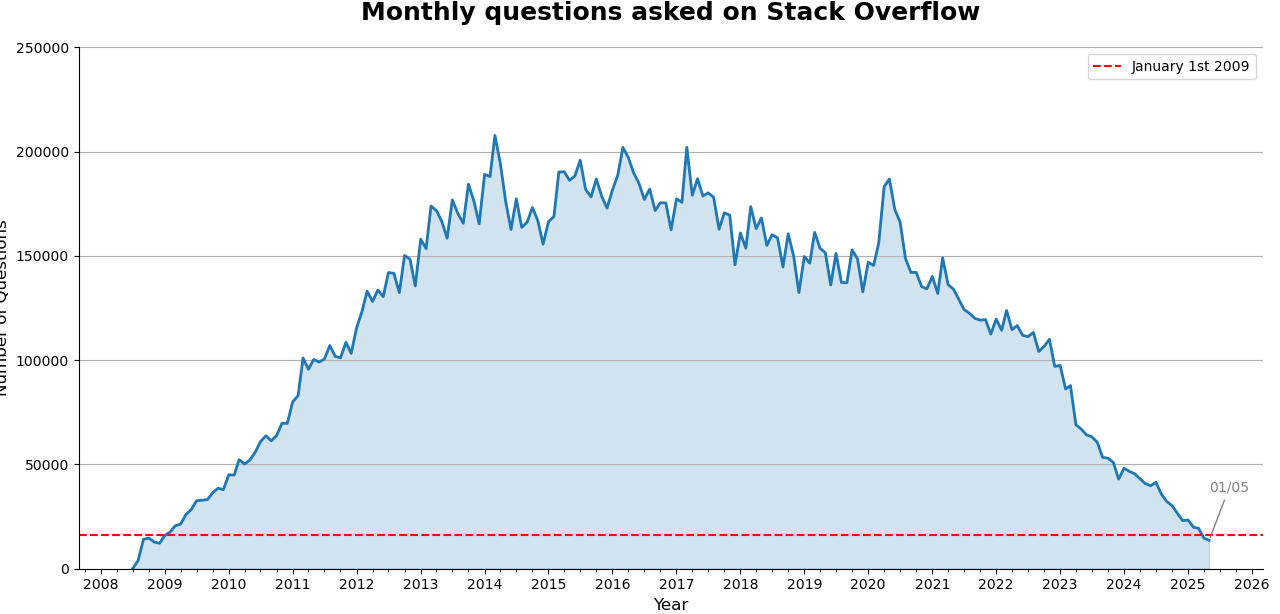

First, there's growing concern about 'model collapse', the digital equivalent of inbreeding. The primary issue is that the deep well of training knowledge is drying up, leading to a plateau of capability and a shrinking, backwards-looking pool of knowledge. The two primary sources of coding know-how are GitHub and Stack Overflow. Before Copilot and Claude Code, I'd have Stack Overflow persistently open to find and discuss coding challenges. It was my second brain. Every developer I know used it constantly as a lifeline for coding issues. Stack Overflow has seen an incredible 95% drop in usage since AI's rise, showing a radical change in the source of coding knowledge. This is a huge problem for future model training. Meanwhile, GitHub is now swamped with half-baked, vibe-coded projects from amateur developers, and will likely become the dominant source of code for training models. It's easy to see this leading to diminishing returns.

Next, human ingenuity stems from our ability to explore genuine unknowns — spaces where concepts don't yet exist and language hasn't formed to describe them. While AI systems excel at recombining and extrapolating from existing human knowledge, they’re constrained by the linguistic and conceptual frameworks of their training data, offering a sophisticated but backwards-facing reflection of what humanity has already thought and expressed. True innovation often emerges from pre-linguistic intuition and embodied experience, from humans who can navigate uncharted territory where the vocabulary itself hasn't been invented yet. AI augmentation is already proving to have a negative impact on human cognition and critical thinking.

The findings revealed a significant negative correlation between frequent AI tool usage and critical thinking abilities, mediated by increased cognitive offloading.

- AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking

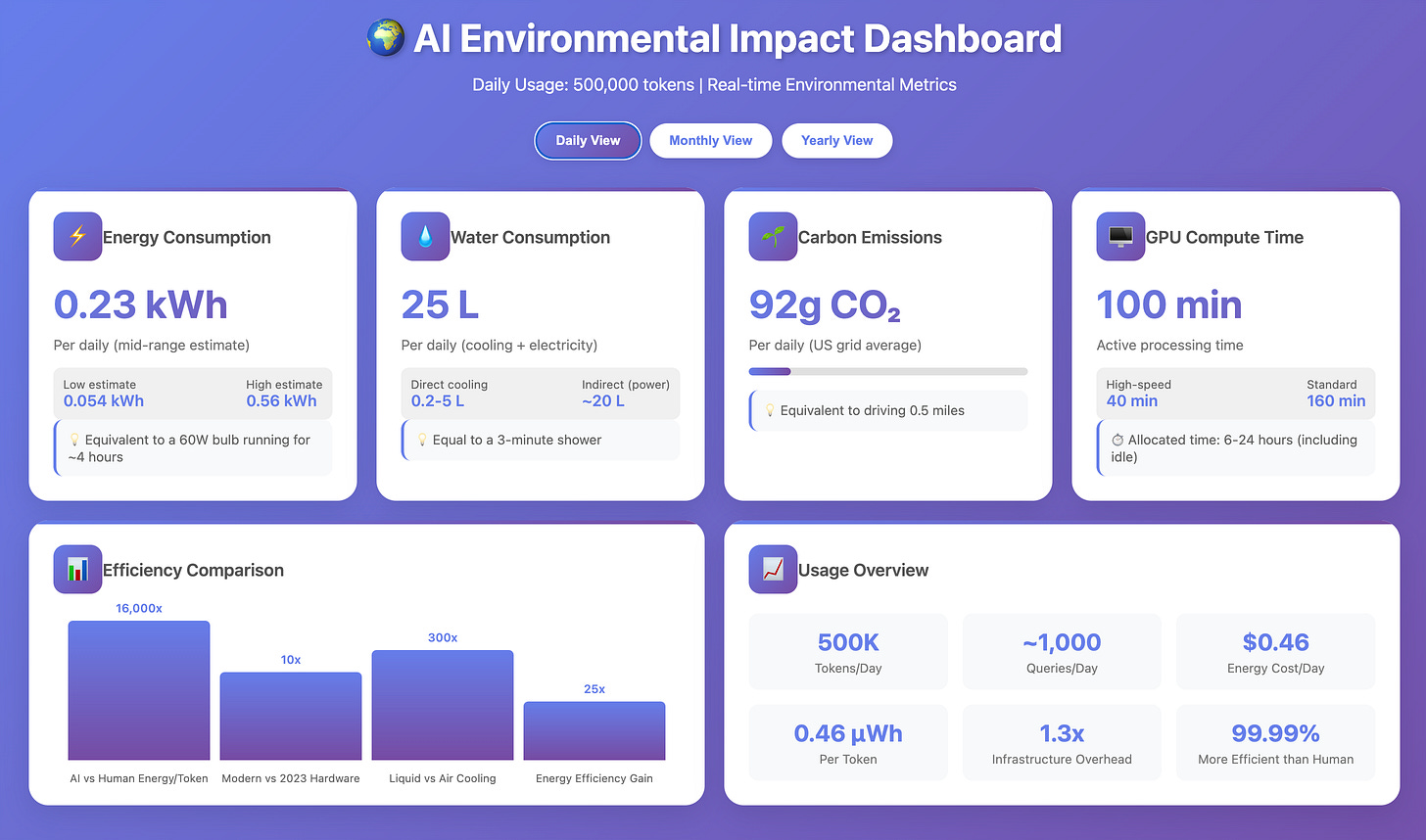

And lastly, the relentless pursuit of optimization driven solely by financial metrics has created a dangerous feedback loop where technological advancement increasingly takes precedence over human welfare. We've reached a telling inflection point, where data center investment now outpaces investment in human workspaces. Meanwhile, the energy infrastructure required to sustain AI's growing computational demands directly competes with the needs of lower-income communities for affordable power. At precisely the moment when we should be reducing our energy dependence and environmental footprint, we're instead accelerating extraction and consumption in service of profit margins. This isn't an abstract concern about sustainability. It's a concrete redistribution of resources away from human communities toward machine infrastructure, optimizing for computational efficiency while externalizing the environmental and social costs. There are promising countertrends: the development of local and edge AI, genuine efficiency improvements, and signs that the exponential growth curve may be recalibrating. But these trends remain insufficient without a fundamental reassessment of our success metrics. We need to confront the reality that our current trajectory prioritizes technological capability over human flourishing, creating a world optimized for machines rather than the people they were meant to serve.

So where does all this leave me? A bit conflicted, but also inspired. As a longtime creative technologist, I feel AI-assisted coding partially solves a problem I’ve long struggled with, which is context switching between creative and engineering mindsets. Much of the heavy lifting is handled by AI, which lets me remain focused on the holistic product or idea. It's not always good vibes. It often feels like I'm working with a senior engineer who's also a toddler with full-blown ADHD, but there's still enough value to make it worth it.

Beyond coding, the AI vibe trend seems to be spreading. Vibe designing, vibe writing, vibe bio engineering, vibe space exploring, vibe investing, vibe marketing, and so on. The term has become synonymous with doing something without the needed experience or rigor, which to me feels like an illusion, and often ignores the skills gap between amateur and professional. I believe generative AI and LLMs will become just another abstraction layer on top of the many layers of technology beneath, and the people who can skillfully wield those tools will become the experts in their fields.

In the meantime, in our nascent and clunky state of applied AI, I recently vibed a comic about my experience with vibe coding gone sideways, which it often does.

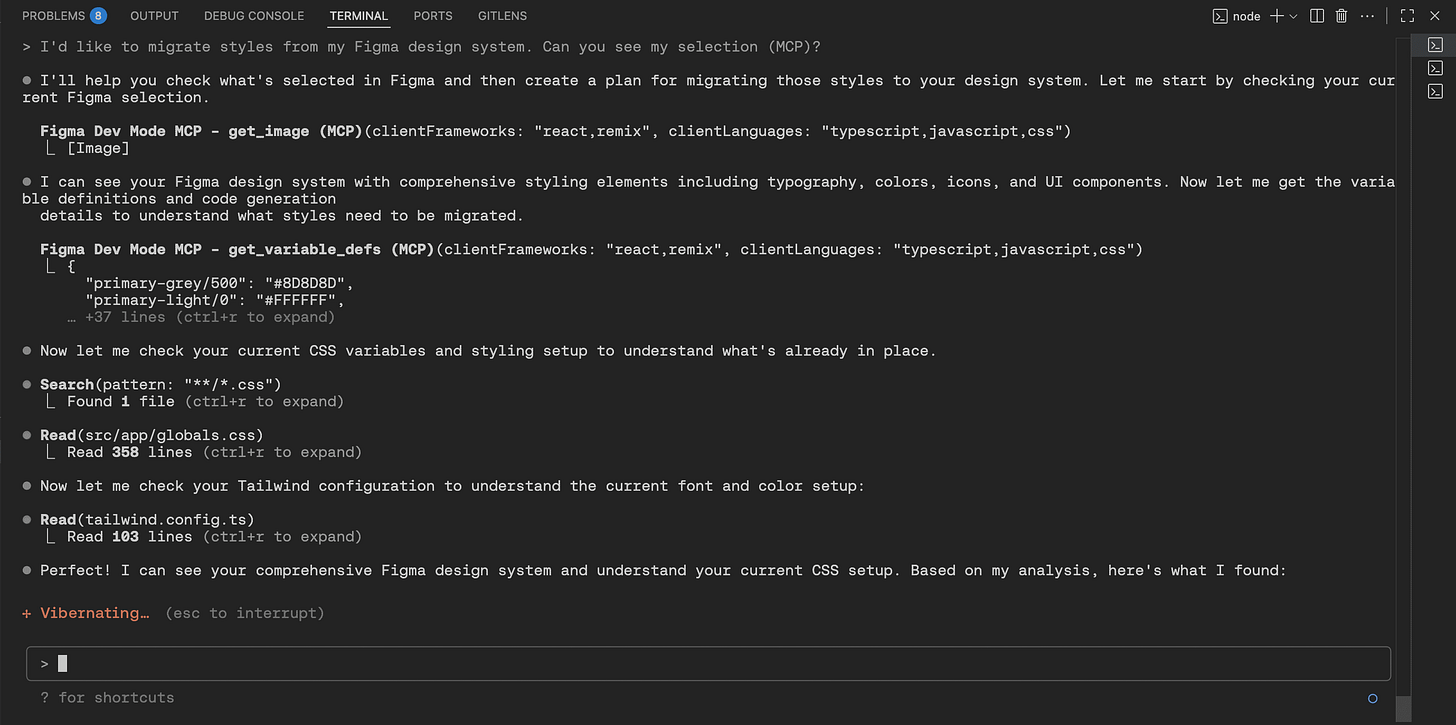

For anyone curious, I’m using VS Code with Claude Code Max. I focus on the setup and planning before I start writing any code. I use MCP to route my designs directly from Figma, and Context7 for current framework documentation. I'm loving Claude Code's "explanatory" output style, which I toggle on to learn about the approach the model is taking for solutions I don't understand well. I take time to review every piece of code written, and I frequently interrupt and redirect. If you have some coding skills and haven’t played with Claude Code, I highly recommend it. Here’s a great getting started guide and video.

I’d love to hear about what’s working for you and what isn't! Success stories are always welcome. Share any tips/tricks, challenge any of my thinking, and help lift our collective dinghies, even if just a morsel. ❤️

love this: "Next, human ingenuity stems from our ability to explore genuine unknowns — spaces where concepts don't yet exist and language hasn't formed to describe them. While AI systems excel at recombining and extrapolating from existing human knowledge, they’re constrained by the linguistic and conceptual frameworks of their training data, offering a sophisticated but backwards-facing reflection of what humanity has already thought and expressed. True innovation often emerges from pre-linguistic intuition and embodied experience, from humans who can navigate uncharted territory where the vocabulary itself hasn't been invented yet. AI augmentation is already proving to have a negative impact on human cognition and critical thinking."

Ammon - excellent post. I, like many others, felt seen and heard when I saw the vibe-coding comic strip. Your point on energy being redirected to data centers vs humans hit home, especially since I read Altman’s comment on how much water each query uses.

I remain conflicted though. I have not been this energized about experimenting and building something in a very long time. How usable those apps are is another question altogether.

Here's what has been working so far for me. I'm not super technical, but know the basics and learn as I go.

1. I write up my idea for the app that I want to build. I explicitly ask Gemini/ChatGPT/Claude not to build it, but to give me an idea of how it would build an app for personal use. I’ll explicitly state that I need the full tech stack for each step and ask it to give me multiple options. I also ask for the reasoning on why one option is recommended over another, and for the order in which I need to build things.

Side note: I feel like with the V0s and Lovables of the world, we have been sold UI first, when my experience (from coding years ago) has been the opposite. Define your data model and your API, and then you can beautify your app using V0/Lovable. So I have gone back to that approach, and rightly so. Otherwise you’re stuck making the UI pretty when it doesn’t really do much (which might be fine for demos). I also feel that going UI first leaves you with junk data when I want to use real data.

2. Gemini 2.5 Pro is a good starting point for actual code. Gemini Flash, ChatGPT and Claude have been good for explaining their reasoning and for basic bug fixes.

3. Here’s my tech stack: React/Next.js via V0, Flask/Python for the API and Supabase for the scripts and edge functions.

4. Since the only paid plans I have are with V0 and Perplexity Pro, I am bound by companies’ free tiers. So once I hit the limit on one model, I must switch to another and run the first model's suggestions against it. It does leave me confused the next day, like who gave me what suggestion! But it’s also a way to test their reasoning and make sure that I’m being led down the right path.

For context, I used data.gov to do a tariff tracking app. It’s about 80% complete since I still need to figure out how to get the “web agent/cron job” working to get the latest JSON when a new version lands.

My evaluation is this:

I am not getting anything built and shipped over a weekend with my free plans and my skill set – yet.

I feel that I can create personal projects, but not something that others can use - yet.

How do I make it secure and not vulnerable to attacks? That is still a gnarly issue for me. I’m sure there are other tools that do it for you, but I do miss a human code reviewer!

I remain hopeful about forward progress.